Here’s a question:

If you were a movie buff, what would interest you more: watching the Oscars live or checking out the headlines a day later? I bet you would go for the former every time, especially if you are a big Leonardo DiCaprio fan (he finally won Best Actor).

Like people, companies can also see value in real time.

If you are a utility, it would be infinitely more useful to find out about a power outage in a particular area immediately than to find out when customers start jamming the help lines.

If you are a credit card company, detecting fraud in real time is an absolutely critical part of your business operations.

The demand for better, more accurate real-time data processing will only increase as more companies generate big data, implement IoT, and invest heavily in predictive analytics.

But there is a problem.

Batch vs. stream processing

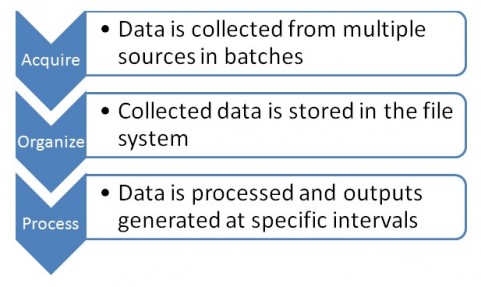

Traditionally, databases have operated in batch mode.

Take the Hadoop-based systems that many companies use to execute their big data plans. Here's how they basically work: data is collected and stored over a set interval, then processed in bulk once that interval ends.

The interval might range from a few minutes to a day, depending on the business need, but there's always a lag between the raw data being generated and insights becoming available.

For tasks such as clearing checks, generating bills, or processing wire transfers, batch processing works well enough.

This process generates accurate results and can scale as far as you need it to, but for all intents and purposes you will be behind the curve, analyzing events AFTER they have taken place.
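To make that lag concrete, here is a minimal Python sketch of the batch model. It is a simulation, not Hadoop code: it assumes events arrive as hypothetical `(timestamp, amount)` pairs and that one job runs at the end of every fixed interval. `run_batch_jobs` is an illustrative name; the interesting number is the worst-case lag it reports for each batch.

```python
from collections import defaultdict


def run_batch_jobs(events, interval):
    """Simulate a batch system (illustrative, not Hadoop code).

    `events` is a list of hypothetical (timestamp, amount) pairs. Events
    accumulate until the end of their interval, when one job processes them.
    Returns {batch_end: (total_amount, worst_case_lag)}.
    """
    batches = defaultdict(list)
    for ts, amount in events:
        # The job that covers this event runs at the end of its interval.
        batch_end = (ts // interval + 1) * interval
        batches[batch_end].append((ts, amount))

    results = {}
    for batch_end in sorted(batches):
        rows = batches[batch_end]
        total = sum(amount for _, amount in rows)
        # The earliest event in a batch waits the longest for its result.
        worst_lag = batch_end - min(ts for ts, _ in rows)
        results[batch_end] = (total, worst_lag)
    return results


# Timestamps in minutes; one job per day (interval=1440).
print(run_batch_jobs([(30, 100), (180, 50), (1439, 25), (1500, 10)], 1440))
# {1440: (175, 1410), 2880: (10, 1380)}
```

With a daily job, an event logged at minute 30 waits 1,410 minutes (23.5 hours) before anyone sees its effect on the totals.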

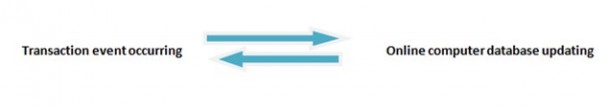

In contrast, if you were to use an open source stream processing system like Apache Storm, here's what the entire data processing workflow looks like: each event is processed the moment it arrives instead of waiting for a batch to fill up.

As you can see, data is processed continuously as it arrives, so there is almost no lag between an event happening and the insight being available.
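A minimal sketch of the streaming model, using the same hypothetical `(timestamp, amount)` event format. This is plain Python, not Storm's API (Storm topologies are built from spouts and bolts, typically in Java); a generator stands in for the stream, updating its state and emitting a result for every event as it arrives.

```python
from typing import Iterable, Iterator, Tuple


def process_stream(
    events: Iterable[Tuple[int, float]],
) -> Iterator[Tuple[int, float]]:
    """Handle each hypothetical (timestamp, amount) event as it arrives.

    Instead of waiting for an interval to close, update the running state
    and emit a result immediately, so the only lag is processing time.
    """
    running_total = 0.0
    for ts, amount in events:
        running_total += amount
        yield ts, running_total


# In a real deployment `events` would be an unbounded source (a message
# queue, a socket); a list stands in for it here.
for ts, total in process_stream([(30, 100), (180, 50), (1439, 25)]):
    print(f"t={ts}: running total {total}")
```

Each event produces an updated result immediately; nothing waits for an interval to close.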

This workflow is increasingly used in cases where speed equals money. Take the analysis of satellite data, for instance:

Satellite images add up to a lot of data — in DigitalGlobe Inc.’s case, 50 TB of geospatial imagery on a daily basis…(It) augments that with text data from 10 to 15 million geo-tagged Twitter posts every day in an effort to get a better sense of what’s happening on the ground worldwide.

Quickly mining and analyzing all that information has been a challenge, though…But the company is looking to speed things up by streaming data for analysis through a combination of big data, stream processing and cloud computing technologies….

The goal is to …go from reporting on events to anticipating them, and ultimately changing outcomes. Real time counting of cars in parking lots at shopping malls could provide an indicator of retail traffic.
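Counting cars over time is a windowed aggregation. The sketch below assumes a hypothetical upstream step has already turned imagery into `(timestamp_seconds, lot_id)` detection events arriving in time order; it counts them per lot in fixed, non-overlapping ("tumbling") windows and emits each window's counts as soon as the next window begins, rather than at the end of the day. `tumbling_counts` and the event format are illustrative assumptions.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator, Tuple


def tumbling_counts(
    detections: Iterable[Tuple[int, str]], window: int
) -> Iterator[Tuple[int, Dict[str, int]]]:
    """Count detections per parking lot in fixed, non-overlapping windows.

    Assumes (timestamp_seconds, lot_id) events arrive in timestamp order.
    Yields (window_start, {lot_id: count}) when a window closes, plus the
    final window when the input ends.
    """
    current_window = None
    counts = Counter()
    for ts, lot in detections:
        w = ts // window
        if current_window is not None and w != current_window:
            # A later event means the previous window is complete: emit it.
            yield current_window * window, dict(counts)
            counts = Counter()
        current_window = w
        counts[lot] += 1
    if current_window is not None:
        yield current_window * window, dict(counts)


events = [(10, "A"), (20, "B"), (50, "A"), (70, "A"), (130, "B")]
for start, counts in tumbling_counts(events, window=60):
    print(start, counts)
```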

Here’s another example of how health insurance companies are using real-time event processing:

Medical claims are processed promptly by insurers so providers can send final bills to patients and get payments back in a timely manner …(but) claims frequently get held up at the approval stage because of eligibility questions and other issues.

Predictive models can look for claims that are likely to be flagged… In batch mode, it can take two to three hours to use incoming data to score a predictive model for accuracy.

In tests, the stream processing technology has been able to score models in seconds…from waiting an entire day (it is possible) to get a result… (in) minutes.

Imagine how much a customer would love you if they could get their medical claims processed in a few minutes instead of days.
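The claims example follows the same per-event pattern: each claim is scored the moment it arrives rather than in a scheduled batch run. In this sketch the `Claim` fields, the logistic-regression weights in `WEIGHTS`, and the 0.5 threshold are all hypothetical placeholders, not any insurer's actual model.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Iterator, Tuple


@dataclass
class Claim:
    claim_id: str
    amount: float
    eligibility_verified: bool


# Hypothetical logistic-regression weights; a real model would be
# trained on historical claims data.
WEIGHTS = {"bias": -2.0, "per_1k_amount": 0.3, "unverified": 2.5}


def flag_probability(claim: Claim) -> float:
    """Estimated probability that the claim gets held up for review."""
    z = (
        WEIGHTS["bias"]
        + WEIGHTS["per_1k_amount"] * claim.amount / 1000
        + WEIGHTS["unverified"] * (0.0 if claim.eligibility_verified else 1.0)
    )
    return 1.0 / (1.0 + math.exp(-z))  # logistic function


def score_stream(
    claims: Iterable[Claim], threshold: float = 0.5
) -> Iterator[Tuple[str, float, bool]]:
    """Score each claim as soon as it arrives instead of in a batch run."""
    for claim in claims:
        p = flag_probability(claim)
        yield claim.claim_id, p, p >= threshold


incoming = [Claim("c1", 1000.0, True), Claim("c2", 1000.0, False)]
for claim_id, p, flagged in score_stream(incoming):
    print(f"{claim_id}: p={p:.2f} flagged={flagged}")
```

A flagged claim can be routed for an eligibility check right away, while the provider is still waiting, instead of surfacing in the next day's batch report.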

Some other applications of real-time big data processing include:

- Pinpointing safe landing zones for a Special Forces team to parachute into, based on real-time information about the target location.

- Policing exclusion zones at sea to detect illegal vessels.

- Performing predictive maintenance in refineries, spotting equipment failures ahead of time based on sensor data.

- Improving fraud detection mechanisms.

- Identifying damaged buildings after natural disasters.
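Several of these applications, such as predictive maintenance and fraud detection, come down to spotting readings that break from recent behavior. A minimal sketch, assuming a single sensor emitting numeric readings: flag any value more than `k` standard deviations from the mean of the previous `window` readings. This rolling z-score rule is a deliberately simple stand-in for the trained models production systems typically use, and `window=5`, `k=3.0` are arbitrary illustrative defaults.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Iterable, Iterator, Tuple


def detect_anomalies(
    readings: Iterable[float], window: int = 5, k: float = 3.0
) -> Iterator[Tuple[int, float]]:
    """Yield (index, value) for readings more than k population standard
    deviations from the mean of the previous `window` readings."""
    recent = deque(maxlen=window)  # oldest reading drops out automatically
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu = mean(recent)
            sigma = pstdev(recent)
            if abs(value - mu) > k * sigma:
                yield i, value
        # Every reading, flagged or not, enters the window; a production
        # system might exclude flagged values so they don't skew the baseline.
        recent.append(value)


sensor = [10.0, 10.2, 9.9, 10.1, 10.0, 10.1, 25.0, 10.0]
print(list(detect_anomalies(sensor)))  # [(6, 25.0)]
```

The spike to `25.0` is flagged the moment it arrives, while normal jitter around `10.0` is not.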

Conclusion

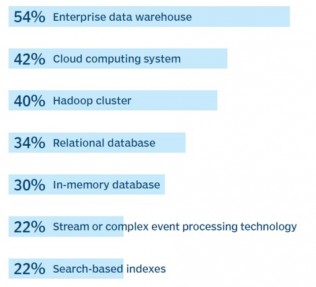

Stream processing is still very niche among companies that have adopted big data: a Gartner study found that only 22% of respondents were interested in real-time data processing.

Most companies still don’t have a pressing need for real-time data analytics, at least not across all use cases, so it makes sense for them to focus on conventional batch processing of big data.

Besides, stream processing entails wholesale changes to business processes and requires specialized IT talent to architect and build the underlying technical infrastructure, which means management has to sign off.

But for use cases where speed can help deliver a better customer experience, stream processing can be a force multiplier, helping you act on events as they happen rather than after the fact.